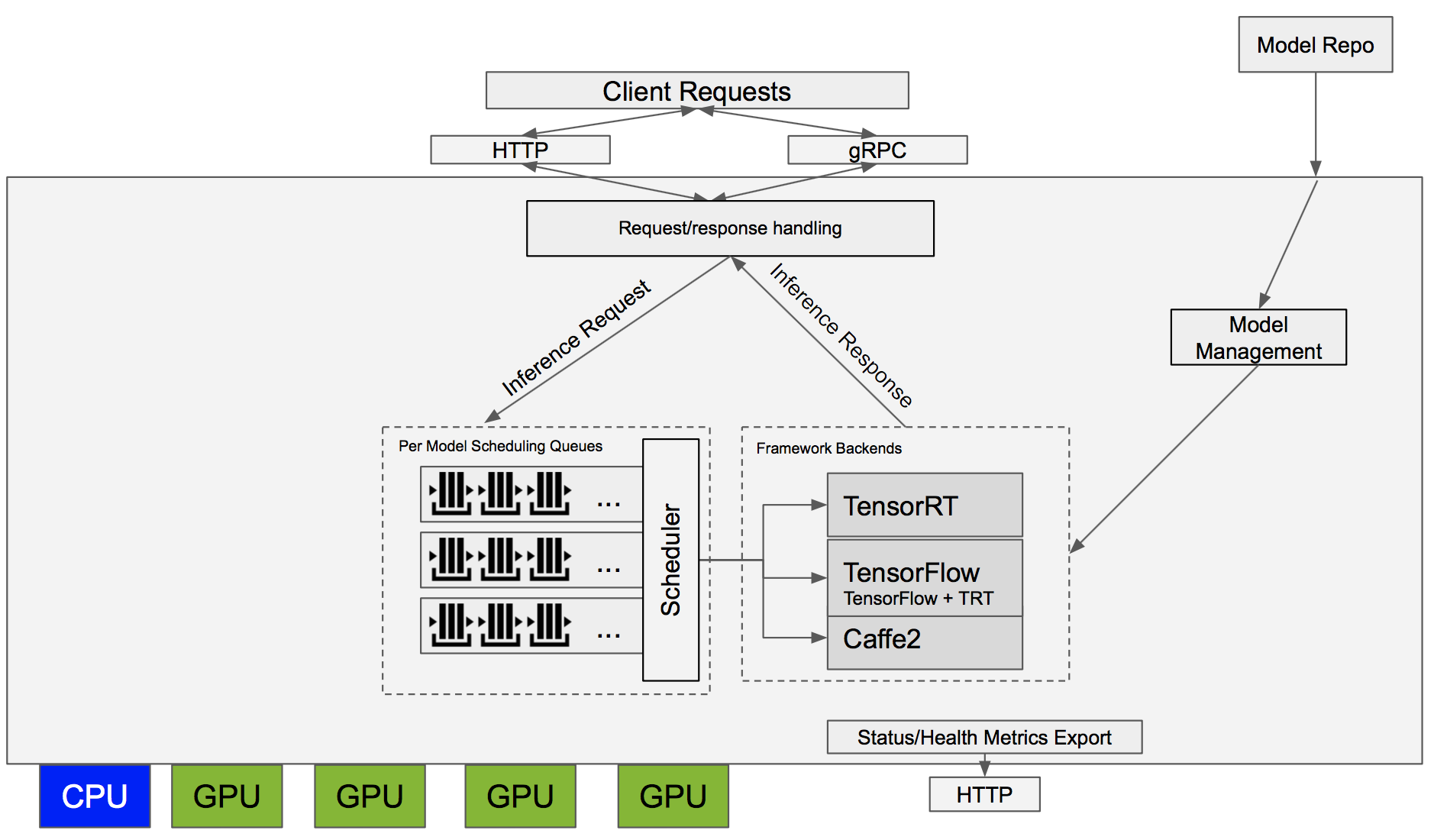

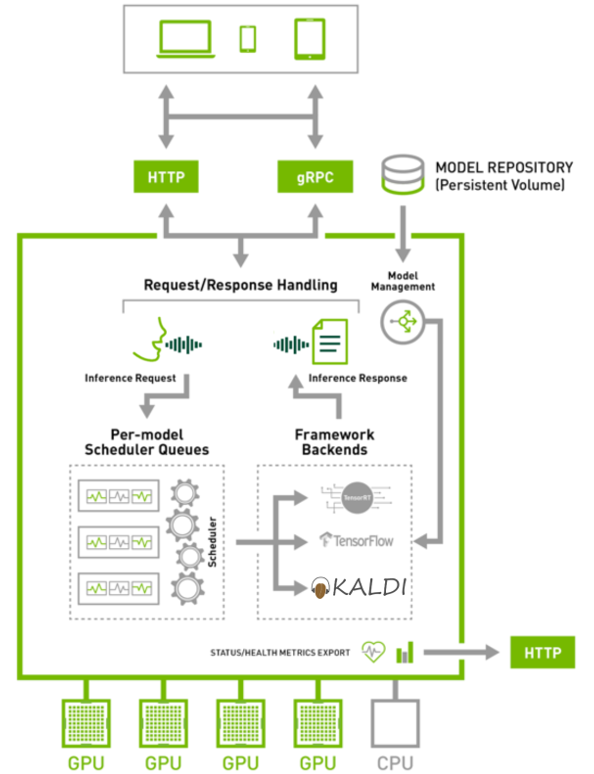

Accelerated Inference for Large Transformer Models Using NVIDIA Triton Inference Server | NVIDIA Technical Blog

Simplifying AI Inference with NVIDIA Triton Inference Server from NVIDIA NGC | NVIDIA Technical Blog

Deploying Diverse AI Model Categories from Public Model Zoo Using NVIDIA Triton Inference Server | NVIDIA Technical Blog

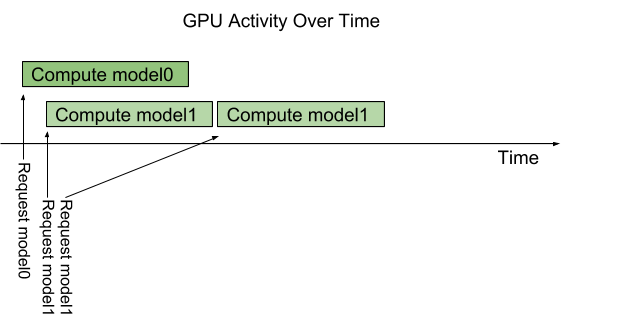

Serving ML Model Pipelines on NVIDIA Triton Inference Server with Ensemble Models | NVIDIA Technical Blog
