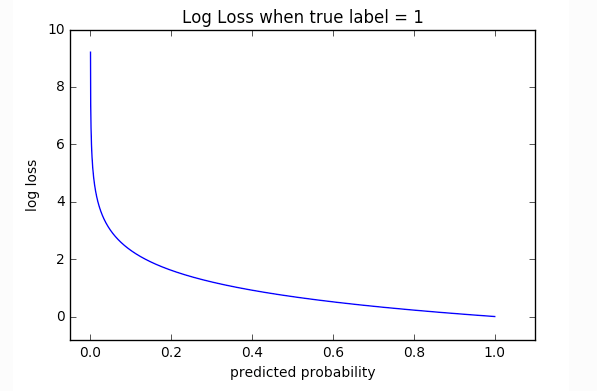

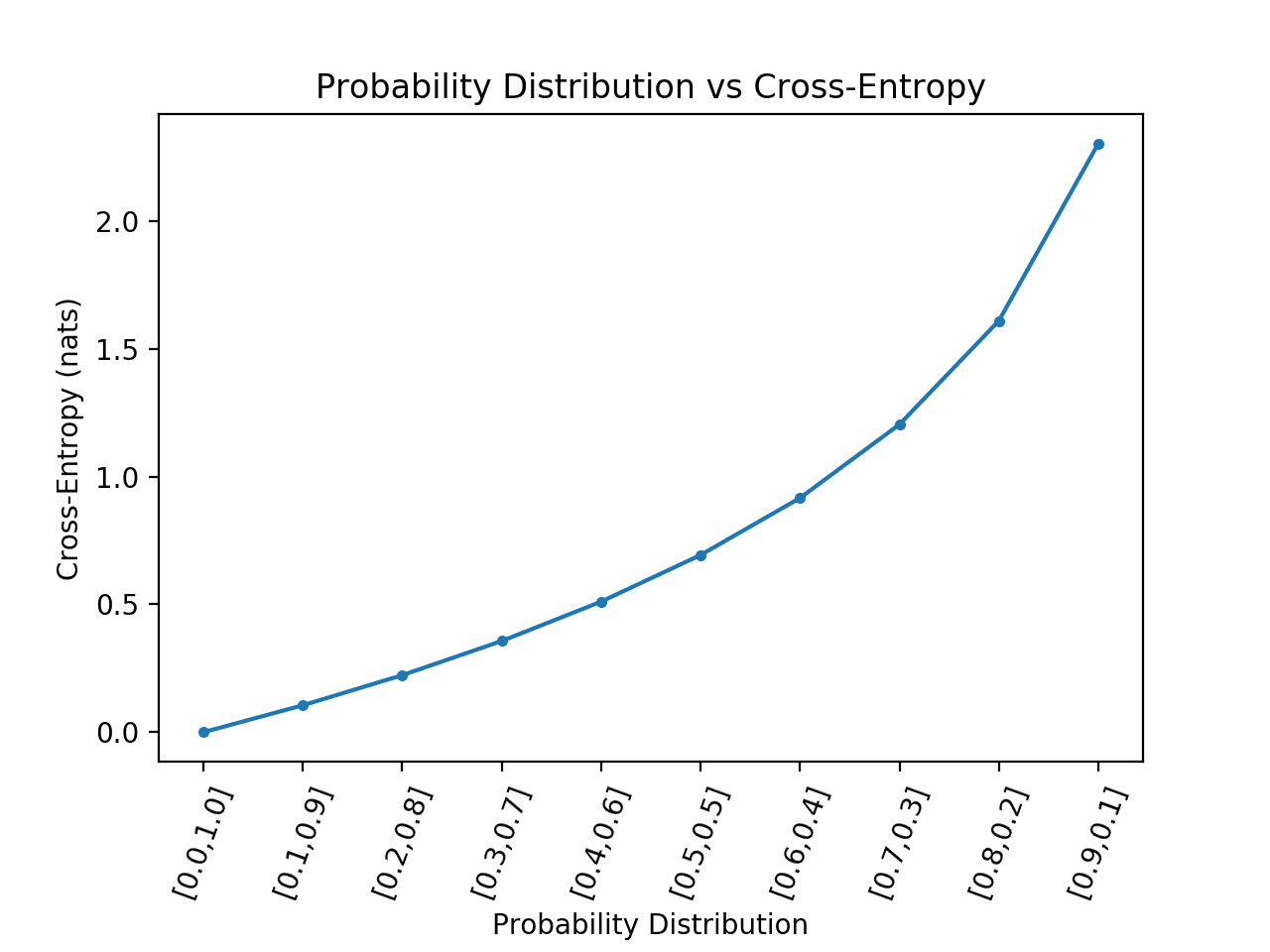

Understanding Categorical Cross-Entropy Loss, Binary Cross-Entropy Loss, Softmax Loss, Logistic Loss, Focal Loss and all those confusing names

Understanding Sigmoid, Logistic, Softmax Functions, and Cross-Entropy Loss (Log Loss) in Classification Problems, by Zhou (Joe) Xu (Towards Data Science)

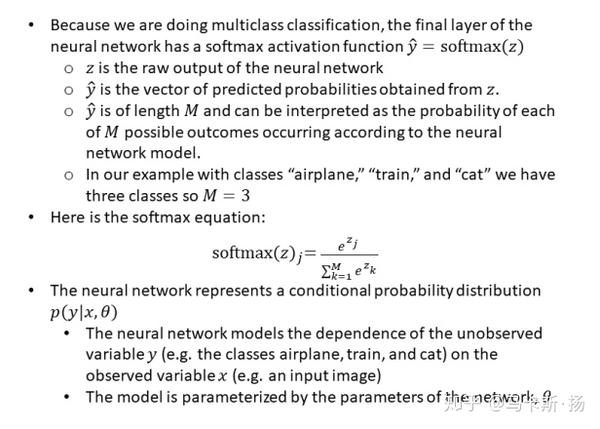

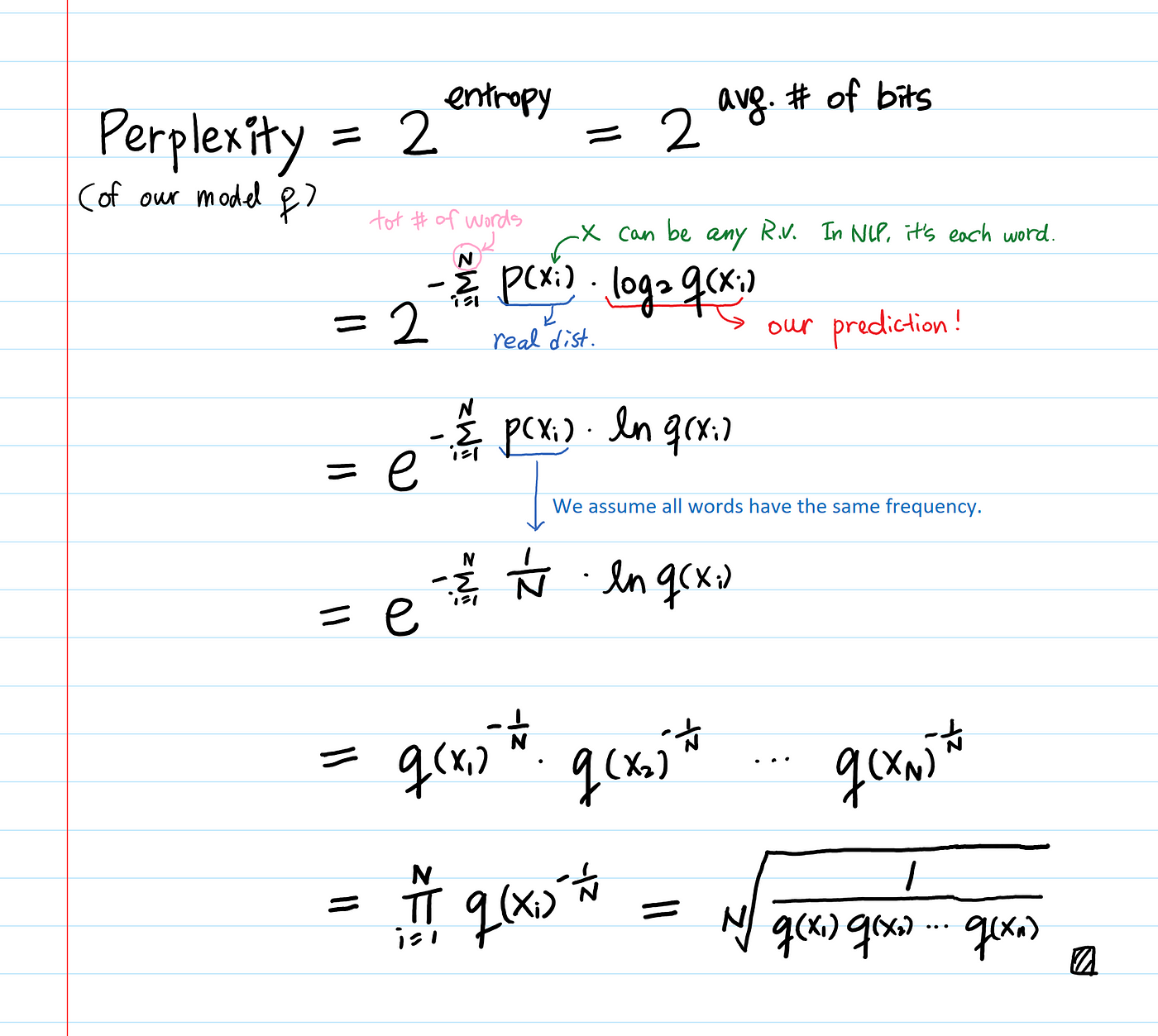

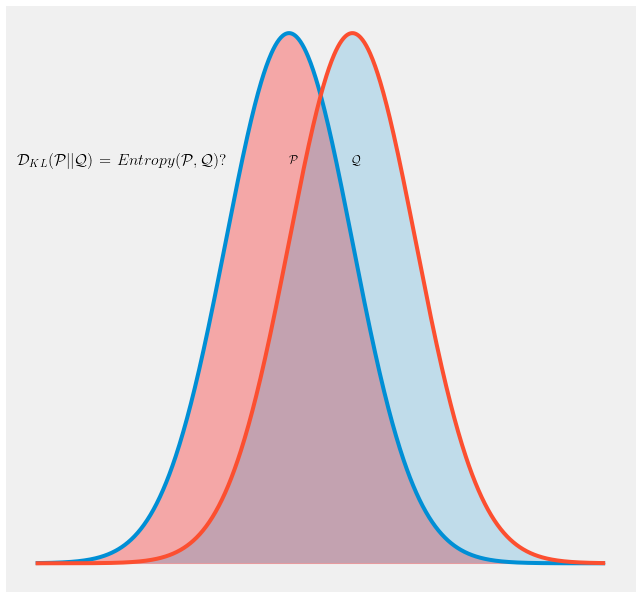

Connections: Log Likelihood, Cross Entropy, KL Divergence, Logistic Regression, and Neural Networks – Glass Box